Back when I worked at Google, from 2006 to 2011, I spent many a December on my 20% project, the Santa Tracker. In those days, the tracker was a collaboration with NORAD, with the Googlers focused on making the map that showed Santa's journey across the world. I was brought in for my expertise with the Google Maps API (my day job was Maps API advocacy), and our small team also included an engineer, an SRE, and a marketing director. It was a formative experience for me, since it was my first time working directly on a consumer-facing website. Here are the three incidents that stuck with me the most...

Nearly crashing Google's servers

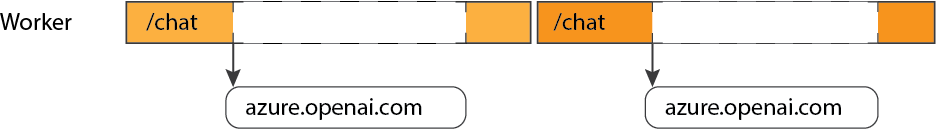

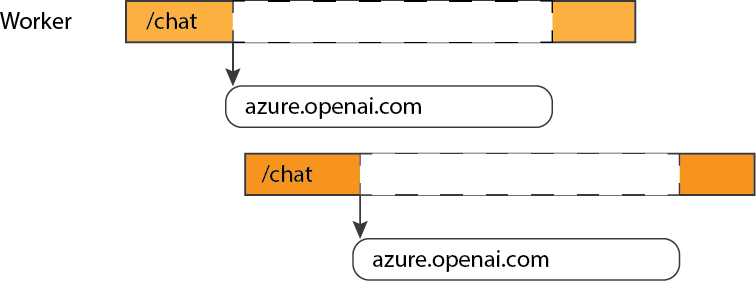

Here's what the tracker map looked like:

The marker is a Santa icon positioned on top of his current location, and a sparkly trail shows his previous locations, so that families could see his trajectory.

We programmed the map so that Santa's moves were coordinated globally: we knew ahead of time when Santa should be in each location, and when the browser time ticked forward to a matching time, the Santa marker hopped to its next location. If 1 million people had the map open, they'd all see Santa move at the same time.
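The scheduling idea can be sketched in a few lines. This is not the tracker's actual code (that was browser JavaScript), just a minimal Python illustration of looking up the current stop from a precomputed schedule. The stop names, the date, and the `current_stop` helper are all hypothetical.

```python
from bisect import bisect_right
from datetime import datetime, timezone

# Hypothetical schedule: (arrival time in UTC, location), sorted by arrival.
SCHEDULE = [
    (datetime(2010, 12, 24, 10, 0, tzinfo=timezone.utc), "Stop A"),
    (datetime(2010, 12, 24, 10, 5, tzinfo=timezone.utc), "Stop B"),
    (datetime(2010, 12, 24, 10, 10, tzinfo=timezone.utc), "Stop C"),
]
ARRIVALS = [arrival for arrival, _ in SCHEDULE]


def current_stop(now):
    """Return the latest stop whose arrival time is <= now, or None before the first."""
    index = bisect_right(ARRIVALS, now) - 1
    return SCHEDULE[index][1] if index >= 0 else None


if __name__ == "__main__":
    print(current_stop(datetime.now(timezone.utc)))
```

Because every client evaluates the same schedule against its own clock, everyone sees the hop at roughly the same moment without any server coordination. It only works as well as the viewers' clocks are set.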

That hop was entirely coded in JavaScript, just a re-positioning of map markers, so it shouldn't have affected the Google servers, right? But our SRE was seeing massive spikes in the server's usage graphs every 5 minutes, during Santa's hops, and was very concerned the servers wouldn't be able to handle increased traffic once the rest of the world tuned in (Santa always started in Australia/Japan and moved west).

So what could cause those spikes? We thought at first it could be the map tiles, since some movements could pan the map enough to load in more tiles... but our map was fairly zoomed out, and most nearby tiles would have been loaded in already.

It was the sparkly trail! My brilliant addition for that year was about to crash Google's servers. The trail was a collection of multiple animated GIFs, and due to the way I'd coded it, the browser made a new img tag for each of them on each hop. And, as you may have guessed by now, there were no caching headers on those GIFs, and no CDN hosting them. Every open map was making six separate HTTP requests at the exact same time. Eek!

Our SRE quickly added in caching headers, so that the browsers would store the images after initial page load, and the Google servers were happy again.
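To make the fix concrete, here's a rough sketch of what "adding caching headers" means and why it mattered. I don't know the exact headers or values our SRE used, so the file extensions, the one-day `max-age`, and both helper functions below are assumptions for illustration only.

```python
from urllib.parse import urlparse

# Hypothetical policy: extensions treated as static, cacheable assets.
STATIC_EXTENSIONS = (".gif", ".png", ".jpg", ".css", ".js")
ONE_DAY_SECONDS = 24 * 60 * 60


def cache_headers(url, max_age=ONE_DAY_SECONDS):
    """Return response headers that let browsers reuse static assets."""
    path = urlparse(url).path.lower()
    if path.endswith(STATIC_EXTENSIONS):
        # "public" also allows shared caches (proxies, CDNs) to store it.
        return {"Cache-Control": f"public, max-age={max_age}"}
    # Anything else must be revalidated with the server before reuse.
    return {"Cache-Control": "no-cache"}


def image_requests_per_hop(open_maps, requests_per_map, cached):
    """Image requests reaching the servers on one hop, after initial page load."""
    return 0 if cached else open_maps * requests_per_map


if __name__ == "__main__":
    print(cache_headers("https://example.com/images/sparkle.gif"))
    print(image_requests_per_hop(1_000_000, 6, cached=False))
```

With the hypothetical 1 million open maps from earlier and six requests per map, an uncached hop means 6 million image requests landing in the same instant. Once the browsers can reuse their stored copies, that number drops to zero.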

Lesson learned: Always audit your cache headers!

Leaking Santa's data

How did the map know which location Santa should visit next? Well, if a 4-year-old is reading this, it sent a request to Santa's sleigh's GPS. For the rest of you, I'll reveal the amazing technology backing the map: a Google spreadsheet.

We coordinated everything via a spreadsheet, and even used scripts inside the sheet to verify the optimal ordering of locations. We needed Santa to visit each of the locations before 10 pm in the local time zone, since one of the goals of the tracker was to help parents get kids to bed by showing them that Santa's on his way. That meant a lot of zigzagging north to south, and some back-and-forth zigzagging to accommodate time zone differences across countries.
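The 10 pm rule is easy to check mechanically. The sketch below is not the script from our spreadsheet; it's a minimal Python version of the same kind of check, with made-up rows, a made-up year, and fixed UTC offsets standing in for real time zone data.

```python
from datetime import date, datetime, time, timedelta, timezone

# Hypothetical rows: (location, arrival in UTC, local UTC offset in hours).
ROWS = [
    ("Stop A", datetime(2010, 12, 24, 11, 0, tzinfo=timezone.utc), 10),   # 21:00 local
    ("Stop B", datetime(2010, 12, 24, 12, 30, tzinfo=timezone.utc), 9),   # 21:30 local
    ("Stop C", datetime(2010, 12, 24, 14, 30, tzinfo=timezone.utc), 8),   # 22:30 local
    ("Stop D", datetime(2010, 12, 25, 5, 30, tzinfo=timezone.utc), -5),   # 00:30 local, Dec 25
]


def late_stops(rows, eve=date(2010, 12, 24), bedtime=time(22, 0)):
    """Return the stops Santa reaches at or after bedtime on Christmas Eve, local time."""
    late = []
    for name, arrival_utc, offset_hours in rows:
        local_zone = timezone(timedelta(hours=offset_hours))
        # The deadline is 10 pm on Dec 24 in the stop's own time zone.
        deadline = datetime.combine(eve, bedtime, tzinfo=local_zone)
        if arrival_utc >= deadline:
            late.append(name)
    return late


if __name__ == "__main__":
    print(late_stops(ROWS))
```

Comparing against a full local deadline, rather than just the clock time, keeps an arrival at 00:30 the next morning from sneaking past the check.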

We published that spreadsheet, so that the webpage could fetch its JSON feed. I think there were some years that we converted the spreadsheet to a plain JSON object in a .js file, but there was at least one year where we fetched the sheet directly. That gave us the advantage of being able to easily update the data for users loading the map later.

We worried a bit that someone would see the spreadsheet and publish the locations, spoiling the surprise for fellow map watchers, but would someone really want to ruin the magic of Xmas like that?

Yes, yes, they would! We discovered somehow (perhaps via a Google alert) that a developer had written a blog post in Japanese describing their discovery of Santa's future locations in a neatly tabulated format. Fortunately, the post attracted little attention, at least in the English-speaking news that we could see.

Lesson learned: Security by obscurity doesn't work if your code and network requests can be easily viewed.

Angering an entire country with my ignorance

This is the most embarrassing, so please try to forgive me.

Background: I grew up in Syracuse, New York. I have fond memories of taking road trips with my family to see Niagara Falls in Toronto. The falls were breathtaking every time.

Fast forward 15 years: I'd been monitoring the map for 20 hours by the time Santa made it to the Americas, so I was pretty tired. I spent a lot of those hours responding to emails sent to Santa, mostly from adorable kids with their wishlists, but also a few from parents troubleshooting map issues. I loved answering those emails as PamElfa, my elf persona.

Suddenly, after Santa hopped to Toronto, we got a flood of angry emails. Why, they rightly wanted to know, did the info window popup say "Toronto, US" instead of "Toronto, CA"?

Oops. I had apparently managed to become a full-grown adult without realizing that Toronto is in an entirely different country. I had no memory of crossing a border on those trips, so I had come to think that Toronto was in New York, or that at least half of it was. (How would that even work?? Sigh.)

I fixed that row in the data, but thousands had already seen the error of my ways, so I spent hours sending apology emails to justifiably upset Canadians (many of whom were quite kind about my mistake). So sorry, again, Canada!

Lesson learned: Double-check geopolitical data, especially when it comes to which cities belong to which countries.
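A check like the one below would have caught my mistake before any Canadians did. It's only a sketch: the reference table is a hypothetical stand-in for a trusted source of city and country data, and `country_mismatches` is a made-up helper, not something we had at the time.

```python
# Hypothetical reference table; in practice, load this from a trusted gazetteer.
REFERENCE_COUNTRY = {
    "Toronto": "CA",
    "Syracuse": "US",
}


def country_mismatches(rows, reference=REFERENCE_COUNTRY):
    """Return (city, listed, expected) for every row that disagrees with the reference.

    Cities missing from the reference are reported with expected=None,
    so a human can look them up instead of trusting the row blindly.
    """
    problems = []
    for city, listed in rows:
        expected = reference.get(city)
        if expected != listed:
            problems.append((city, listed, expected))
    return problems


if __name__ == "__main__":
    rows = [("Toronto", "US"), ("Syracuse", "US")]
    for city, listed, expected in country_mismatches(rows):
        print(f"{city}: listed as {listed}, reference says {expected}")
```

Keying on the city name alone is a simplification, since plenty of cities share a name across countries. Real data would need a less ambiguous key, such as coordinates or a region.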